This is Part 2 in our "How to use Mint" series, where we use Mint, Elixir's shiny new HTTP package, to create an Awesome Toolbox using the Github API.

In our last post (Part 1 - How to use Mint), we started building out the Awesome Toolbox, which takes an awesome list (a curated collection of awesome stuff on Github -- blog posts, videos, packages, libraries, tools) -- and annotates it with useful information about each repository.

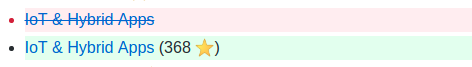

Thus far, we have gathered the contents of each README through the Github API using Mint. Now, our next step is to process the README by enriching each line with stats, specifically the Github repo star count.

Also, today we are really going to push the performance of our app using Mint. Spoiler: by the end, we'll be able to cut our processing time by more than a half!

The Goal:

To make an awesome list more awesome, we'll enrich it with more data, transforming it like the diff below:

A typical awesome list has more than 500 Github repos, and annotating it requires getting the stats of all those 500 repositories. Making 500 HTTP requests is not trivial and it will serve as a nice example to demonstrate the power of Mint.

Let's first create a function called Github.repo_info which will help us get the stats of one repository. Implementing this is pretty straight forward with the recv_response function that we set up in Part 01. recv_response will parse an HTTP response and give us the body of the response, which we then parse using a JSON decoder to get a map containing all the repository stats:

defmodule AwesomeToolbox.Github do

# ...

def repo_info(repo_full_name) do

# create an HTTP connection

{:ok, conn} = Mint.HTTP.connect(:https, "api.github.com", 443)

# make a GET request to get the repo info

{:ok, conn, request_ref} =

Mint.HTTP.request(conn, "GET", "/repos/#{repo_full_name}", [

{"content-type", "application/json"}

])

# read and parse the response

{:ok, conn, body} = recv_response(conn, request_ref)

# decode the JSON

json = Jason.decode!(body)

# close the HTTP connection

Mint.HTTP.close(conn)

{:ok, json}

end

# ...

end

Now that we have a function that can give us the star count of any repository, we just need to process the whole readme line by line.

We can annotate a single line by extracting the repository's full name, using the Github.repo_info function to get the star count, and then appending it to the original line. If we run into an error, we can return the untransformed line.

Here's the code:

@github_repo_rx ~r/https:\/\/github.com\/(?<repo_name>[0-9a-zA-Z._-]+\/[0-9a-zA-Z._-]+)/

@star ":star:"

defp annotate_line(line) do

# find the github repo link

with %{"repo_name" => repo_name} <- Regex.named_captures(@github_repo_rx, line),

# get the star count

{:repo_info, {:ok, %{"stargazers_count" => stargazers_count}}} <-

{:repo_info, Github.repo_info(repo_name)} do

# append it to the link

Regex.replace(

~r/(\(?https:\/\/github.com\/#{repo_name}\#?[0-9a-zA-Z._-]* *\)?)/,

line,

"\\1 (#{stargazers_count} #{@star})"

)

else

# in case of an error log and return the unchanged line

{:error, error} ->

Logger.error("ANNOTATE_LINE_ERROR: #{inspect(error)}")

line

{:repo_info, err_resp} ->

Logger.error("ANNOTATE_LINE_ERROR: #{inspect(err_resp)}")

line

# if we don't find a github link, return the unchanged line

_ ->

line

end

end

Now that we have a function to transform a single line of an awesome list, it is pretty straightforward to use it to transform all the lines.

The annotate_readme function below takes a Github repo name (like sindresorhus/awesome), gets the contents of the README file, splits it on newlines, and takes each line that contains a link to a Github repo and adds the star count information to it, finally joining them back together.

# annotates a readme with star count

def annotate_readme(repo_name) do

# if we don't find a readme, just return the error

with {:ok, readme} <- Github.readme(repo_name) do

readme

|> String.split("\n") # split the readme on newlines

|> Enum.map(&annotate_line/1) # annotate each line

|> Enum.join("\n") # join them back using newlines

end

end

Alright, Now that we have a basic implementation working, let's test it out with something real!

To start, we'll run it against one of the first awesome lists at https://github.com/sindresorhus/awesome. Let's also benchmark this so that we know how much time the whole process takes, which will help us understand the average baseline performance and help us quantify our improvements down the line. Benchmarking is one of the cornerstones of high-performance apps!

We can use the Erlang function :timer.tc to measure the time taken to annotate the whole README. :timer.tc returns the time taken to execute a given function in microseconds.

Here is a quick demo of :timer.tc

# sleep for 1000 milliseconds

iex(2)> :timer.tc fn -> :timer.sleep(1000) end

{1001125, :ok}

# :timer.tc returns the number of microseconds taken to execute the given function

Let's build a small function which prints the annotated README along with the time taken:

def annotate_with_time(repo_name) do

{us, {:ok, readme}} = :timer.tc(fn -> annotate_readme(repo_name) end)

IO.puts("""

# ------------------------------

# Time taken: #{us/1000} milliseconds

# ------------------------------

# README

# ------------------------------

#{readme}

""")

{us, readme}

end

Running it on a test repository gives us this:

iex(1)> AwesomeToolbox.annotate_with_time "minhajuddin/awesome_test"

# ------------------------------

# Time taken: 1180.181 milliseconds

# ------------------------------

# README

# ------------------------------

#

# Awesome Elixir lists

#

#

# ## Deployment

# Distillery https://github.com/bitwalker/distillery (2415 ⭐) https://github.com/bitwalker/exprotobuf

#

# [Elixir](https://github.com/elixir-lang/elixir) (15335 ⭐)

#

# [Broken Link](https://github.com/elixir-lang/elixir-foobar)

So, running this against a test README with 3 links took 1 second. Time for the real thing -- here we go!

iex(2)> AwesomeToolbox.annotate_with_time "sindresorhus/awesome"

# ------------------------------

# Time taken: 272182.367 milliseconds

# ------------------------------

# README

# ------------------------------

# ...

# ## Platforms

#

# - [Node.js](https://github.com/sindresorhus/awesome-nodejs (30680 ⭐)#readme) - Async non-blocking event-driven JavaScript runtime built on Chrome's V8 JavaScript engine.

# - [Cross-Platform](https://github.com/bcoe/awesome-cross-platform-nodejs (749 ⭐)#readme) - Writing cross-platform code on Node.js.

# - [Frontend Development](https://github.com/dypsilon/frontend-dev-bookmarks (25993 ⭐)#readme)

# # ..... after 50 links the repos are not being annotated

#

# - [JavaScript](https://github.com/sorrycc/awesome-javascript#readme)

# - [Promises](https://github.com/wbinnssmith/awesome-promises#readme)

This time, it took about 5 minutes to process our list, which contains about 500 links. Also, our function ended up annotating only about 50 links, which is strange!

We have no idea what's going wrong because we currently have no error-logging. Our code doesn't even check the HTTP status code of the responses, which ideally should have returned an error on non-200 responses.

Let's tweak our recv_response code to parse and return a lightweight Response struct which includes an HTTP status code. This will help us handle error responses which don't have a 200 status code.

Also, our current Github module does a lot of things, including managing the wrappers for the Mint HTTP logic. It's always a good idea to make modules cohesive and have them do only one thing, so while we are making changes, let's move the HTTP-related code to a separate HTTP module to make our Github module cleaner.

defmodule HTTP do

require Logger

def get(uri, headers \\ []), do: request("GET", uri, headers)

def request(method, uri, headers \\ [], body \\ [])

def request(method, uri, headers, body)

when is_list(headers) and method in ~w[GET POST PUT PATCH DELETE OPTIONS HEAD] and

is_binary(uri) do

request(method, URI.parse(uri), headers, body)

end

def request(method, uri = %URI{}, headers, body)

when is_list(headers) and method in ~w[GET POST PUT PATCH DELETE OPTIONS HEAD] do

Logger.info("#{method} #{uri}")

{:ok, conn} = Mint.HTTP.connect(scheme_atom(uri.scheme), uri.host, uri.port)

{:ok, conn, request_ref} = Mint.HTTP.request(conn, method, path(uri), headers, body)

{:ok, http_response = %HTTP.Response{}} = recv_response(conn, request_ref)

Mint.HTTP.close(conn)

{:ok, http_response}

end

defp recv_response(conn, request_ref, http_response \\ %HTTP.Response{}) do

receive do

message ->

{:ok, conn, mint_messages} = Mint.HTTP.stream(conn, message)

case HTTP.Response.parse(mint_messages, request_ref, http_response) do

{:ok, http_response = %HTTP.Response{complete?: true}} ->

{:ok, http_response}

{:ok, http_response} ->

recv_response(conn, request_ref, http_response)

error ->

error

end

end

end

# copied over from Mint

defp path(uri) do

IO.iodata_to_binary([

if(uri.path, do: uri.path, else: ["/"]),

if(uri.query, do: ["?" | uri.query], else: []),

if(uri.fragment, do: ["#" | uri.fragment], else: [])

])

end

defp scheme_atom("https"), do: :https

defp scheme_atom("http"), do: :http

defp scheme_atom(_), do: throw(:invalid_scheme)

end

defmodule HTTP.Response do

defstruct status_code: nil, headers: nil, body: [], complete?: false

def parse(mint_messages, request_ref, http_response \\ %__MODULE__{})

def parse(

[{:status, request_ref, status_code} | mint_messages],

request_ref,

http_response = %HTTP.Response{}

) do

parse(mint_messages, request_ref, %{http_response | status_code: status_code})

end

def parse(

[{:headers, request_ref, headers} | mint_messages],

request_ref,

http_response = %HTTP.Response{}

) do

parse(mint_messages, request_ref, %{http_response | headers: headers})

end

def parse(

[{:data, request_ref, data} | mint_messages],

request_ref,

http_response = %HTTP.Response{}

) do

parse(mint_messages, request_ref, %{http_response | body: [data | http_response.body]})

end

def parse(

[{:done, request_ref}],

request_ref,

http_response = %HTTP.Response{}

) do

{:ok, %{http_response | body: Enum.reverse(http_response.body), complete?: true}}

end

def parse([{_, mint_request_ref, _} | _], request_ref, _)

when mint_request_ref != request_ref,

do: {:error, :invalid_ref}

def parse([{_, mint_request_ref} | _], request_ref, _)

when mint_request_ref != request_ref,

do: {:error, :invalid_ref}

def parse([], _request_ref, http_response), do: {:ok, http_response}

end

This was a huge refactoring, but it allows us to simplify our code a lot. Moreover, it gives us an HTTP.Response struct that has a lot of useful information, like the response headers and the status codes.

iex> HTTP.get "https://api.github.com/zen"

{:ok,

%HTTP.Response{

body: ["Responsive is better than fast."],

headers: [

{"server", "GitHub.com"},

# ...

],

status_code: 200

}}

Alright! Now that we have the ability to detect failures, let's handle them properly. Let's fix our repo_info function to handle errors:

def repo_info(repo_name) do

with {:ok, %HTTP.Response{status_code: 200} = resp} <-

HTTP.get("https://api.github.com/repos/#{repo_name}", @default_headers),

{:ok, repo_info} <- Jason.decode(resp.body) do

{:ok, repo_info}

else

err -> {:error, err}

end

end

So, with the error handling set up, let's give it another go:

AwesomeToolbox.annotate_readme "sindresorhus/awesome"

This command runs fine for a bit, and then starts printing errors like the one below:

12:01:36.235 [info] GET https://api.github.com/repos/vorpaljs/awesome-vorpal

12:01:36.696 [error] REPO_INFO_ERROR: {:ok, %HTTP.Response{

body: ["{\"message\":\"API rate limit exceeded for ... }"],

complete?: true,

headers: [{"server", "GitHub.com"}, ...],

status_code: 403}}

This tells us that we are running into rate limits with GitHub! Now we know why only 50 lines were getting annotated with stats -- GitHub allows you to send just 50 requests per IP address per hour for unauthenticated requests.

The good news is that we can use an authentication token to get a higher limit of 5000 requests per hour, so let's tweak our Github.repo_info function to pass an authentication token with each HTTP request.

Github allows us to pass an authentication token using the Authorization HTTP header like so (the Github token can be manually generated from https://github.com/settings/tokens):

$ curl --header "Authorization: Bearer ${GITHUB_TOKEN}" https://api.github.com/repos/elixir-lang/elixir/readme

defmodule AwesomeToolbox.Github do

require Logger

@default_headers [{"authorization", "Bearer #{System.get_env("GITHUB_TOKEN")}"}]

# ...

def repo_info(repo_name) do

with {:ok, %HTTP.Response{status_code: 200} = resp} <-

HTTP.get("https://api.github.com/repos/#{repo_name}", @default_headers),

{:ok, repo_info} <- Jason.decode(resp.body) do

{:ok, repo_info}

else

err -> {:error, err}

end

end

end

Now we should be able to annotate the whole file:

iex> AwesomeToolbox.annotate_with_time "sindresorhus/awesome"

# ------------------------------

# Time taken: 272182.367 milliseconds

# ------------------------------

# README

# ------------------------------

# ...

This is awesome, we are finally able to annotate a README. You can check out all the changes required for this code on GitHub at awesome_toolbox/commit/39f8edd

So, annotating 500 Github repo URLs takes about 5 minutes, which is pretty slow. At this point, being an Elixir user, you might be tempted to retrieve these stats in a concurrent fashion. Elixir makes this so easy -- just use spawn and spin up 500 processes and be done with it, and Github can likely handle this spike.

However, what if the server that you're hitting is one of your own? Can it handle this kind of a spike? Also, this is just for one user -- if 10 users hit our app with 500 line awesome lists, our app would, in turn, hit Github with 5000 new connections/requests at the same time. This isn't how a good API consumer should behave -- we can do better!

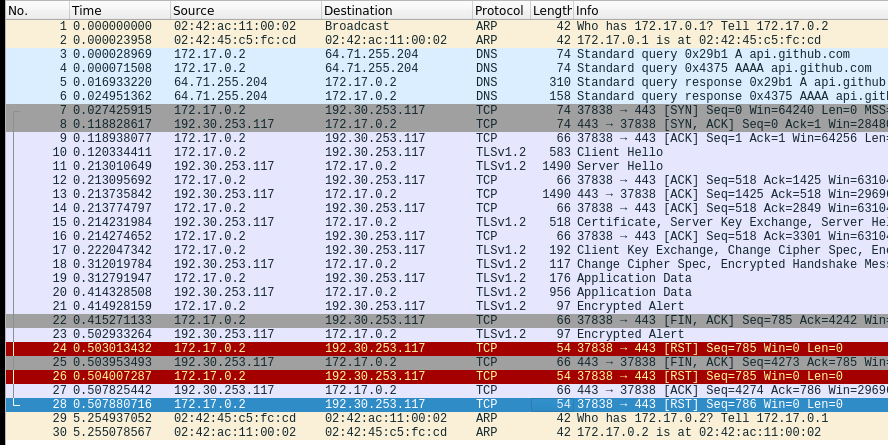

Let's also take a quick look at what making a single request entails on a TCP packet level. Sending a single HTTP request on a new connection to Github has the following packet exchange between the client and server (we are just tracking the TCP packets for this example):

curl https://api.github.com/zen

This packet exchange was captured using Wireshark (which is an awesome tool that is highly worth checking out). It allows us to inspect the actual network packets being sent from our computer to the network.

The packets numbered 7 through 28 are the packets which were exchanged to make 1 HTTP request. During this exchange, our client and server did not start exchanging actual data until packet #19 (which is 12 packets after the first TCP packet). We have this huge ceremony with which we set up a secure TCP connection (12 packets), and then we use it to just send 1 teeny-tiny message, after which we tear it down and start the ceremony all over again.

This is what your application does if you make HTTP requests without connection re-use.

We don't want to do that in our app, so let's start re-using our HTTP connections to make our code more efficient.

The right way to re-use connections is by using a connection pooling library like poolboy. However, for the sake of expediency, let's just use the process dictionary for a simpler solution. A process dictionary is also used by Ecto for database connections.

Every process has a process dictionary, which can be used to store data related to that particular process (it's similar to Thread.current if you are coming from ruby). We can use this process dictionary to store a connection and re-use it for successive calls.

A quick demo of the process dictionary:

Process.put(:answer, 42)

IO.puts "The answer is #{Process.get(:answer)}"

# => The answer is 42

The changes required to re-use HTTP connections in the HTTP module are:

def request(method, uri = %URI{}, headers, body)

when is_list(headers) and method in ~w[GET POST PUT PATCH DELETE OPTIONS HEAD] do

# TODO: spawn a new process per request so that the messages don't get intermingled

# get an existing connection if it is already available

conn = get_connection(uri)

Logger.info("#{method} #{uri}")

{:ok, conn, request_ref} = Mint.HTTP.request(conn, method, path(uri), headers, body)

{:ok, http_response = %HTTP.Response{}, conn} = recv_response(conn, request_ref)

# put this connection in the process dictionary to be reused later

put_connection(uri, conn)

{:ok, http_response}

end

# Put our connection in the process dictionary keyed on the hostname so that

# we don't reuse connections for different hosts.

defp put_connection(uri, conn) do

Process.put({:conn, uri.host}, conn)

end

# Get a connection if it was previously created, else create a new connection

defp get_connection(uri) do

conn = Process.get({:conn, uri.host})

if conn do

conn

else

Logger.info("creating HTTP connection to Github")

{:ok, conn} = Mint.HTTP.connect(scheme_atom(uri.scheme), uri.host, uri.port)

conn

end

end

We are now trying to retrieve a previous connection before making a request, and if the current request is the first request, we create a new connection. Once we are done with making a request and receiving a response, we are shoving the connection back to the process dictionary to be picked up later when another request is made.

Let's run our benchmark once again and see if this change impacted the execution time:

iex(2)> {us, readme} = AwesomeToolbox.annotate_with_time "sindresorhus/awesome"

# ------------------------------

# Time taken: 99400.432 milliseconds

# ------------------------------

# README

# ------------------------------

Woohooo! re-using HTTP connections brought down our processing time from 272.18 seconds to 99.4 seconds a two-thirds speed up(63%) just by reusing our HTTP connections.

Summary

- In this post, we started off by using parts of the code that were written in Part-01 to fetch the contents of the README and then retrieve all the repository stats using the same basic functions.

- We found that our HTTP wrapper needed proper logging and error handling to tell us that we were running into GitHub rate limiting, we also did a major refactoring to clean up our code into cohesive modules.

- And then we used GitHub's auth tokens and Authorization headers to bump our rate limits so that we could get all the information to annotate a README in one go.

- Finally, we looked at why throwing away TCP connections is so wasteful and how we could re-use HTTP connections using Mint and the process dictionary to cut the processing time from 5 minutes to 1.5 minutes.

Hope you found this post useful and that it drives you to write more performant and efficient code. The learnings from this post can be applied to any language (if you are from the ruby world and want to do something similar, take a look at excon).

In conclusion, make sure that you re-use your HTTP connections, don't just throw them away! As a developer, I didn't think a lot about all the nitty-gritty of writing efficient code in the past, and I am really grateful to the Elixir and Erlang community for teaching me about performance with this is a focus. I hope to share more of these insights with you in the future.

The changes for this patch can be found at awesome_toolbox/commit/bdb63d2b

Thank you Casey for the feedback on this post!