How Containerized Applications Increase Speed & Efficiency

Most modern applications are designed as a set of microservices, with each service running as an independent application. In other words, one large application is broken down into small apps that run independently and communicate with one another. This makes apps much easier to build and maintain, and it offers even more value when combined with containerization technology. This article covers the following topics:

- What is Containerization?

- Why Leading Companies Are Adopting Containerization

- Container Management Platforms

- Containerization vs. Virtualization

- Container Security

- Disadvantages of Application Containerization

What is Containerization?

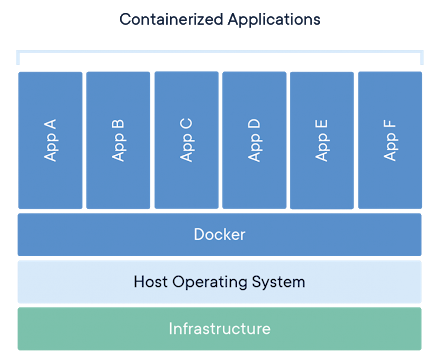

Containerization involves bundling an application together with all of the dependencies, libraries, and configuration files it needs to run efficiently and consistently across different computing environments.

The application, together with its configuration files, libraries, and dependencies (which may include other applications), is packaged in an isolated user space called a container. User spaces are explained later in this article. Containers share the host's operating system.

A container is a lightweight, isolated package of one or more executables.

This software is packaged together with its dependencies, ranging from libraries and other executables to configuration files, all encapsulated in a single unit. The package is portable and can run on any platform that supports the container runtime.

In a nutshell, a container is a fully packaged and portable computing environment. Everything an application needs to run is encapsulated and isolated in its container. The container itself is abstracted away from the host operating system, with access only to the underlying resources it needs. As a result, containerized applications can run in various computing environments without being reconfigured for each one. Running an application in a virtual machine (VM) means setting up a guest operating system on top of the host operating system; containers, by contrast, share the host's OS kernel. The kernel is a computer program at the core of the computer's operating system.
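To make the idea of bundling an application with its dependencies more concrete, here is a minimal Python sketch that renders the text of a Dockerfile, the build recipe Docker reads to produce a container image. The function name `render_dockerfile`, the base image tag, and the file names are assumptions chosen for illustration; only the Dockerfile instructions themselves (`FROM`, `WORKDIR`, `COPY`, `RUN`, `CMD`) are standard. The sketch only produces text and never talks to Docker.

```python
import json


def render_dockerfile(base_image, requirements_file, entry_script):
    """Return Dockerfile text that packages a Python app with its dependencies.

    All argument values are illustrative; nothing here calls Docker itself.
    """
    lines = [
        f"FROM {base_image}",                       # base image providing the runtime
        "WORKDIR /app",                             # working directory inside the image
        f"COPY {requirements_file} .",              # copy the dependency list first
        f"RUN pip install -r {requirements_file}",  # bake the dependencies into the image
        "COPY . .",                                 # copy the application code
        # The exec form of CMD is a JSON array, so json.dumps gives valid quoting.
        f"CMD {json.dumps(['python', entry_script])}",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical values: an official slim Python image and a conventional layout.
dockerfile_text = render_dockerfile("python:3.12-slim", "requirements.txt", "app.py")
```

Everything the application needs (runtime, libraries, code, start command) is declared in one place, which is what lets the resulting image run unchanged on any host with a compatible container engine.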

Because of this efficiency and flexibility, containerization is widely used to package the individual microservices that make up modern distributed applications.

How Does Containerization Technology Work?

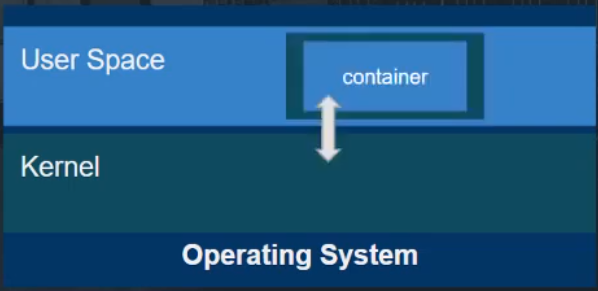

Each container runs on top of a host operating system. A host may run many containers. Containers run as processes with access to the host’s kernel.

A container engine, such as the popular Docker Engine, is installed on top of the host operating system. It supports the creation of isolated user spaces within the host operating system's user space.

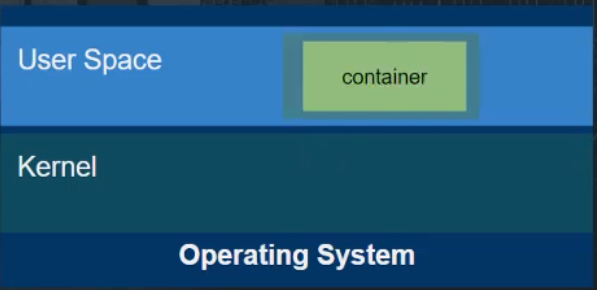

User space refers to all of the code in an operating system that lives outside of the kernel. An isolated user space runs as a process in the host OS user space and communicates directly with the kernel. This isolated user space is what is referred to as a container.
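On Linux, this isolation is built from kernel namespaces, and each process's namespace memberships are exposed as symbolic links under `/proc/<pid>/ns` with targets of the form `pid:[4026531836]`. The sketch below, written under the assumption of a Linux host, parses such targets and lists the namespaces of the current process. The function names are hypothetical, and the inode numbers in the examples are made up.

```python
import os
import re

# A namespace link target looks like 'pid:[4026531836]'.
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a namespace link target into (kind, inode)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link target: {target!r}")
    return match.group("kind"), int(match.group("inode"))


def current_namespaces(pid="self"):
    """Return {entry name: inode} for a process; empty dict where /proc is absent."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}
    result = {}
    for name in os.listdir(ns_dir):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        except (OSError, ValueError):
            continue  # skip entries that cannot be read or recognised
        result[name] = inode
    return result
```

Two processes that report the same inode for, say, the `pid` entry share that namespace; a process inside a container typically reports different inodes from a process running directly on the host.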

This makes it possible to run applications in isolation. Shipping containers are probably the best thing that happened to the shipping industry in the 20th century, and containerization has done much the same for the software world: it provides better process isolation, a new era of standardization, and a convenient way to move software across platforms, to mention but a few benefits.

The Difference Between Application Containers and System Containers

Does the difference between application containers and system containers really matter? Depending on your needs, it may or may not. Here is why.

In the Docker era, people usually mean application containers when they talk about containers: containers whose sole purpose is to run a single application inside an isolated environment.

Application containers are the primary use case for Docker, which is arguably the most widely used container engine today.

System Containers

Containerization technology was originally designed as a way to containerize a full operating system (OS) rather than just a single app. A system container is thus a container that runs a full OS. Examples of system containers that have made it to the scene over the years include:

1. Solaris containers

Sun debuted these in 2005, mainly as a way to virtualize other Solaris systems on top of a Solaris host.

2. Virtuozzo’s OpenVZ

Its history stretches back even further than that of Solaris containers. It was also designed as a system container platform.

3. Canonical’s new LXD container platform

Announced in late 2014, with its 2.0 long-term-support release following in 2016, it offers yet another system container option.

Does the difference matter?

Companies with system container platforms claim to solve a different problem from the one most application container platforms focus on. But this difference seems less important given that an application container engine such as Docker could just as well be used to run a full operating system. Nothing stops one from running a complete OS inside a Docker container. People just choose not to do it.

It's worth noting that containers remained a niche technology until Docker came along and revolutionized how applications are built and shipped to production. Before that, the focus was mainly on system containers, and few people were using containers in production.

Why Leading Companies Are Adopting Containerization

To appreciate containerization, one needs to take a walk down memory lane to a time before containers.

Before containerization, developers who wanted to build an app would start by preparing their development environment: installing the required libraries and modules, perhaps running certain scripts, creating configuration files, and so on. Everything was installed alongside everything else on the machine. To work on another project, one had to go through the same steps again.

Later, after testing that everything worked on their own machines, developers would push to production. This is where most problems would surface. The production environment would in most cases require a different configuration for the deployed app to run, and there was a high chance of conflicts with applications already installed on the production server.

To avoid all this, one had to go through the tedious work of managing packages and configurations.

At this point, one had to manage different versions of different modules. The problem became even worse when one tried running apps on different platforms and operating systems.

The only way to achieve separation and encapsulate an application together with its environment was to use virtual machines. But this came at a performance penalty, and in most use cases virtual machines were overkill, providing more than what was actually needed.

Another issue was cluttering machines with different overlapping setups. This caused conflicts between different versions of running programs, or between multiple instances of the same application using the same resource on the computer. Starting, restarting, and stopping applications was not fun either. And when one wanted to move to a different production environment, the new environment had to be set up and configured all over again, a lengthy and tedious process that took hours and sometimes days.

Then came containerization, providing a better way to build and ship applications. The benefits that drove, and are still driving, companies to adopt this technology include the following:

1. Containers are Lightweight

Containers share the machine's OS kernel and therefore do not require an OS per application, which drives higher server efficiency and reduces server and licensing costs. Containers that share a single kernel typically start up in a few seconds, instead of the minutes often required to start a virtual machine.

2. Better resource usage

Companies need to use the cloud resources they pay for efficiently, and containers make this easier. Take Docker containers running on a cloud server as an example. When one container is not using resources such as CPU or memory, those resources are available to other containers that need them. This gives users a smoother experience and gives you greater value for the money spent on hosting.
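The redistribution idea can be sketched as a small allocation model. The Python function below is a simplified illustration, not Docker's or the Linux scheduler's actual algorithm: it splits a fixed CPU capacity between containers in proportion to relative weights (similar in spirit to the relative weights set with Docker's `--cpu-shares` option) and passes on whatever a lightly loaded container does not need. The function name, container names, and numbers are assumptions.

```python
def allocate_cpu(capacity, weights, demands):
    """Weighted, work-conserving split of `capacity` CPU units.

    weights: {name: relative weight}; demands: {name: CPU units wanted}.
    No container gets more than it demands; unused share flows to the others.
    """
    allocation = {name: 0.0 for name in weights}
    active = {name for name in weights if demands[name] > 0}
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total_weight = sum(weights[name] for name in active)
        # Offer each active container its weighted slice of what is left.
        offers = {n: remaining * weights[n] / total_weight for n in active}
        satisfied = {n for n in active if demands[n] - allocation[n] <= offers[n]}
        if not satisfied:
            # Everyone wants more than their slice: hand out the slices and stop.
            for name in active:
                allocation[name] += offers[name]
            break
        # Fully serve the satisfied containers, then redistribute the rest.
        for name in satisfied:
            need = demands[name] - allocation[name]
            allocation[name] += need
            remaining -= need
        active -= satisfied
    return allocation


# Hypothetical host with 4 CPUs and three equally weighted containers.
shares = allocate_cpu(
    4,
    weights={"web": 1024, "api": 1024, "batch": 1024},
    demands={"web": 0.5, "api": 3.0, "batch": 3.0},
)
```

With these inputs the lightly loaded `web` container receives the 0.5 CPU it asks for, and the capacity it leaves unused is divided evenly between `api` and `batch`, which end up with 1.75 CPUs each.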

3. Easy to scale

Containers are scalable, have a small disk footprint, and are very easy to share.

As the number of users of an application grows, the deployment must scale to maintain a smooth experience and keep the application from crashing under high traffic. With a containerized application this is straightforward: all one needs is the container image to fire up multiple instances of the application. It is easier still with an orchestration platform such as Kubernetes.
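How many instances to run can itself be computed from load. The sketch below follows the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation (desired replicas = ceil(current replicas × current metric / target metric)); the function name, the clamping bounds, and the example numbers are assumptions, and a real autoscaler adds tolerances and stabilization windows that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Replica count suggested by the ratio of observed load to target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, raw))
```

For example, three replicas averaging 90 percent CPU against a 60 percent target give `desired_replicas(3, 90, 60)`, which evaluates to 5, so two more copies of the same image would be started.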

4. Easier Management

Through orchestration, companies no longer have to manage containers manually. Solutions such as Kubernetes make it easier to manage containerized apps and services. It is now possible to automate continuous integration and deployment, perform rollbacks, orchestrate storage systems, balance load, and restart failing containers.
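Orchestrators generally work by comparing the state you declared with the state they observe and then acting on the difference. The following Python sketch illustrates that idea in a few lines; it is a toy model rather than how Kubernetes is implemented, and the function name, action labels, and state strings are all hypothetical.

```python
def reconcile(desired, running):
    """Compare desired replica counts with observed containers and list actions.

    desired: {service: replica count}; running: {service: [container states]}.
    """
    actions = []
    for service, want in sorted(desired.items()):
        states = running.get(service, [])
        healthy = sum(1 for state in states if state == "running")
        failed = len(states) - healthy
        if failed:
            actions.append(("remove_failed", service, failed))
        if healthy < want:
            actions.append(("start", service, want - healthy))
        elif healthy > want:
            actions.append(("stop", service, healthy - want))
    return actions


# Hypothetical cluster snapshot: one api container has exited, web has one too many.
plan = reconcile(
    desired={"api": 3, "web": 2},
    running={"api": ["running", "exited", "running"],
             "web": ["running", "running", "running"]},
)
```

Running this loop repeatedly is what turns "restart failing containers" from a manual chore into automatic behaviour: the failed `api` container is cleaned up and replaced, and the surplus `web` container is stopped.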

5. Fault Isolation and Recovery

One important factor for opting for containerization is fault isolation. If one container fails, other containers running on the same operating system are not affected. Consequently, a container that has gone down can be fired back up without affecting other containers on the same system. This is because each container runs in its own user space isolated from the rest of the applications and containers on the system.
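The principle can be demonstrated with ordinary operating system processes, which containers build on. In this Python sketch one child process crashes while another, started afterwards, runs normally. Plain processes are not containers and offer weaker isolation, so treat this only as an analogy; the helper name `run_isolated` is an assumption.

```python
import subprocess
import sys


def run_isolated(code):
    """Run a Python snippet in a separate OS process and return its exit code."""
    completed = subprocess.run([sys.executable, "-c", code], capture_output=True)
    return completed.returncode


crash_code = run_isolated("raise SystemExit(3)")  # simulated failing workload
ok_code = run_isolated("print('still healthy')")  # neighbour started afterwards
```

The failing workload exits with code 3, the neighbour exits with code 0, and the parent program keeps running throughout, which is the behaviour a container engine relies on when it restarts one container without touching the others.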

6. Portability

Containers are abstracted from the host operating system, so they run the same regardless of the computing environment they are deployed to, provided that environment supports the containerization technology the containers are built on. For example, Linux containers can run on Windows through Hyper-V isolation.

7. Lower costs than virtual machines

Rather than incurring the high costs that come with the deployment of multiple VMs, companies are opting for containers. A container does not require a full guest OS. This implies faster boot times, less resource usage, and better performance generally.

8. Accelerate workflows

Containerization allows for quick development, packaging, and deployment of applications across different operating systems.

Container Management Platforms

One of the challenges that developers and companies adopting containerization face is managing containers, especially as they grow in number. Thanks to the developer community, a number of solutions already exist to tackle exactly this, and they provide extra features that further reduce the pain of building and shipping software to production. Below, I highlight some platforms that stand out.

Kubernetes

Kubernetes is an open-source container-orchestration system for automating computer application deployment, scaling, and management. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation.

It groups containers that make up an application into logical units for easy management and discovery. Kubernetes builds upon 15 years of experience of running production workloads at Google, combined with best-of-breed ideas and practices from the community.

Designed on the same principles that allow Google to run billions of containers a week, Kubernetes can scale without increasing your ops team.

Docker Swarm

Docker Swarm or simply Swarm is an open-source container orchestration platform and is the native clustering engine for and by Docker.

Any software, services, or tools that run with Docker containers run equally well in Swarm. Also, Swarm utilizes the same command line from Docker.

Docker Swarm has most of the features found in other container management platforms, such as cluster management, scaling, load balancing, and rolling updates, to mention but a few.

If you are looking for a tool that automates resource management, freeing your DevOps team to work on customer-facing solutions, Kubernetes may be the better choice. Docker's tools allow finer manual control at the expense of some automation, but when it comes to full integration, Kubernetes is the more popular option.
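A rolling update replaces the running copies of a service a few at a time, so that some instances keep serving traffic throughout. The generator below is a minimal Python model of that idea under simplifying assumptions (no health checks, no rollback); it does not use Swarm's API, and the function name and version strings are hypothetical.

```python
def rolling_update(replicas, new_version, batch_size=1):
    """Yield the list of replica versions after each batch is replaced.

    replicas: list of version strings currently running.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    current = list(replicas)
    for start in range(0, len(current), batch_size):
        for index in range(start, min(start + batch_size, len(current))):
            current[index] = new_version  # swap one replica for the new version
        yield list(current)


# Three replicas of a hypothetical service, updated one at a time.
steps = list(rolling_update(["v1", "v1", "v1"], "v2"))
```

Each intermediate step still contains replicas that are up, which is why a rolling update avoids the downtime of stopping everything and starting again.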

Mesos from Apache

Apache Mesos is an open-source tool used to manage containers. It was developed at the University of California, Berkeley. Mesos began as a research project in the UC Berkeley RAD Lab by then Ph.D. students Benjamin Hindman, Andy Konwinski, and Matei Zaharia, as well as professor Ion Stoica.

Apache Mesos makes upgrading easy and non-disruptive.

Mesos works with both Docker and AppC and has APIs that allow for the development of distributed applications. It allows for the custom isolation of CPU, memory, ports, disk, and other resources. This gives more flexibility when managing your containers and determining which resources can be shared among them.

Amazon ECS

Amazon Elastic Container Service (Amazon ECS) is a fully managed container orchestration service. Customers such as Duolingo, Samsung, GE, and Cookpad use ECS to run their most sensitive and mission-critical applications because of its security, reliability, and scalability.

ECS is a great choice to run containers for several reasons. First, you can choose to run your ECS clusters using AWS Fargate, which is a serverless compute engine for containers. Fargate removes the need to provision and manage servers, lets you specify and pay for resources per application, and improves security through application isolation by design. Second, ECS is used extensively within Amazon to power services such as Amazon SageMaker, AWS Batch, Amazon Lex, and Amazon.com’s recommendation engine, ensuring ECS is tested extensively for security, reliability, and availability.

Google Kubernetes Engine (GKE)

GKE is an enterprise-grade platform for containerized applications. It is secure by default.

Google Kubernetes Engine provides a managed environment for deploying, managing, and scaling your containerized applications using Google infrastructure. The GKE environment consists of multiple machines (specifically, Compute Engine instances) grouped together to form a cluster.

With GKE, features such as four-way auto-scaling, no-stress management, optimized GPU and TPU provisioning, integrated developer tools, and multi-cluster support are available out of the box. A GKE cluster can be created with a single click.

GKE reduces operational overhead with auto-repair, auto-upgrade, and release channels, and it includes vulnerability scanning of container images, data encryption, and integrated Cloud Monitoring. This helps speed up app development without sacrificing security.

Red Hat OpenShift

Red Hat describes OpenShift as “A hybrid cloud, enterprise Kubernetes platform to build and deliver better applications faster”. It offers automated installation, upgrades, and lifecycle management throughout the container stack (the operating system, Kubernetes and cluster services, and applications) on any cloud. Red Hat OpenShift helps teams build with speed, agility, confidence, and choice.

OpenShift is a family of containerization software developed by Red Hat. Its flagship product is the OpenShift Container Platform, an on-premises platform as a service built around Docker containers orchestrated and managed by Kubernetes on a foundation of Red Hat Enterprise Linux. OpenShift helps one develop and deploy applications to one or more hosts. These can be public-facing web applications or backend applications, including microservices and databases.

Google Cloud Run

Cloud Run is a managed computing platform that enables you to run stateless containers that are invocable via web requests or Pub/Sub events. Cloud Run is serverless: it abstracts away all infrastructure management, so you can focus on what matters most — building great applications.

Containerization vs. Virtualization

Virtual machines have been a go-to solution for a while now. Not long ago, when a software developer wanted to build and deploy a stand-alone piece of software that required an environment configured in a specific way, virtualization made that possible, along with the isolation, portability, and other properties developers needed.

With virtual machines, the software is packaged together with a whole operating system. Although this sounds practical, more often than not a virtual machine requires more resources than are actually needed. A virtual machine typically reserves all the memory allocated to it, even if the packaged program requires much less.

Imagine you wanted to package a simple microservice, say an HTTP server that sends a message when it receives a request. Using virtualization, this simple microservice would be encapsulated in a whole operating system, which is expensive and rarely justified. With containers, however, we achieve the same benefits at a much lower cost.
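For a sense of scale, a microservice of this kind can be a few lines of Python using only the standard library. The sketch below is one possible version; the class name, message text, and default port are assumptions made for illustration.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class MessageHandler(BaseHTTPRequestHandler):
    """Reply to every GET request with a fixed plain-text message."""

    def do_GET(self):
        body = b"hello from a tiny microservice\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet; the default logs each request to stderr


def make_server(port=8080, host="127.0.0.1"):
    """Create (but do not start) the server; call serve_forever() to run it."""
    return HTTPServer((host, port), MessageHandler)
```

Calling `make_server().serve_forever()` starts the service. Inside a container one would usually bind to `0.0.0.0` rather than `127.0.0.1` so that the published port is reachable from outside. A program this small hardly justifies a dedicated guest operating system, which is the point the comparison makes.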

How? An operating system is divided into two main parts: the user space and the kernel space.

The user space contains the applications used by the user and by other applications, such as GUI apps, browsers, command-line tools, and essentially everything that is beyond the kernel's responsibility. When a program is executed, a new process is created in the user space. It makes calls to the kernel, and all programs run in a similar way in the user space.

A container is also a process created in the user space, but this process is different. A container is a fully fledged, self-contained user space in itself, which in turn contains the program to be packaged along with its dependencies. So when we create a container, what we are actually creating is a user space that contains everything the software we want to ship depends on. That is why containers are extremely lightweight compared to virtual machines. It also means managing containers is quicker and more efficient, and containers consume much less computing power than virtual machines.

Both technologies abstract resources and are used to package and ship isolated, stand-alone software. The difference is in the level of abstraction and in how they achieve isolation, security, and the other features they provide. With virtualization, software gets a whole operating system's resources to itself, because what we package and ship is a complete operating system with its own kernel. Containers, however, share the same kernel and require relatively few resources compared to virtual machines.

The promise behind containers is that, instead of shipping the whole operating system with the software and its dependencies, we simply package code and its dependencies into a container, and from then on we can ship and distribute these containers easily. All that is needed is one host operating system.

Container Security

Containers make it easy to build, package, and promote an application or service, and all its dependencies, throughout its entire lifecycle and across different environments and deployment targets. With the flexibility provided by containers, security must not be left out of the picture.

Container security is the protection of the integrity of containers. This includes everything from the applications they hold to the infrastructure they rely on.

So are containers even secure?

Containers themselves are actually a security tool. They offer additional ways to secure applications, from improved application isolation and faster, safer software patching than traditional systems such as VMs to additional built-in capabilities and processes.

Research has compared the security of containers and virtual machines. The conventional view was that a virtual machine is very secure because the hypervisor enforces isolation, whereas containers are less secure because they all share the same operating system. With improvements in Linux kernel features such as namespaces and cgroups (control groups), container isolation has improved to the point that, for many workloads, it approaches virtual machine isolation.
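Control groups are visible from user space: on Linux, the file `/proc/<pid>/cgroup` lists the groups a process belongs to, one per line, in the form `hierarchy-ID:controller-list:cgroup-path`. The Python sketch below parses that format. The function name is hypothetical, and the sample paths in the usage lines are invented for illustration rather than copied from a real system.

```python
def parse_cgroup_file(text):
    """Parse /proc/<pid>/cgroup content into (hierarchy_id, controllers, path) tuples."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # Each line is 'hierarchy-ID:controller-list:cgroup-path'.
        hierarchy_id, controllers, path = line.split(":", 2)
        names = [name for name in controllers.split(",") if name]
        entries.append((int(hierarchy_id), names, path))
    return entries


# Invented sample lines: a cgroup v2 entry and a cgroup v1 entry.
v2_entries = parse_cgroup_file("0::/system.slice/docker-abc123.scope\n")
v1_entries = parse_cgroup_file("4:cpu,cpuacct:/docker/abc123\n")
```

On a host using cgroup v2 there is a single line with an empty controller list; on older cgroup v1 hosts there is one line per hierarchy. Either way, the path shows which group the kernel uses to account for and limit the process's resources.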

So now with containers, we get security, much better resource use, and also file system re-use, one of the best benefits of containers.

Disadvantages of Application Containerization

Despite all the advantages of running containerized applications, it's important to understand some of the limitations involved. The following are some disadvantages associated with application containerization.

1. Dealing with persistent data storage is complicated.

Data written inside a container's own writable layer is lost when the container is removed. Though there are ways to persist data, such as volumes, it is still a challenge that has yet to be addressed seamlessly.
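The usual workaround is to write data that must survive to storage mounted from outside the container. The Python sketch below simulates the difference with ordinary temporary directories: one stands in for the container's own filesystem layer and is deleted when the "container" is removed, and the other stands in for a mounted volume. It does not use Docker, and all names and file contents are hypothetical.

```python
import os
import shutil
import tempfile


def run_and_remove(volume_dir):
    """Simulate a container's life: write to its own layer and to a volume, then remove it."""
    layer_dir = tempfile.mkdtemp(prefix="container-layer-")  # stands in for the writable layer
    layer_file = os.path.join(layer_dir, "scratch.txt")
    volume_file = os.path.join(volume_dir, "orders.txt")
    for path in (layer_file, volume_file):
        with open(path, "w", encoding="utf-8") as handle:
            handle.write("order #1\n")
    shutil.rmtree(layer_dir)  # removing the container discards its writable layer
    return os.path.exists(layer_file), os.path.exists(volume_file)


volume = tempfile.mkdtemp(prefix="named-volume-")  # stands in for a mounted volume
layer_survived, volume_survived = run_and_remove(volume)
```

After the run, `layer_survived` is `False` and `volume_survived` is `True`: only the file written to the volume directory is still there. Deciding which paths belong on a volume, and managing those volumes across hosts, is where the complexity described above comes from.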

2. Containers don’t run at bare-metal speeds.

Although they consume resources more efficiently than VMs, containers still incur some performance overhead from interfacing with the host OS, among other things. If 100 percent bare-metal performance is needed, then one needs bare metal, not containers.

3. Containers are not ideal for graphical applications.

Containerization was designed as a solution for deploying cloud applications that don’t require graphical interfaces. Though there are notable efforts (such as X11 forwarding) that can be used to run a GUI app in a container, these solutions are clunky at best.

4. Not every application will benefit from containers.

Containerization is ideal for applications designed to run as a set of microservices. Otherwise, the only real benefit of containerization is simplifying application delivery by providing an easy packaging mechanism.

Containers are Here to Stay (at least for now)

More companies are adopting containers and experiencing drastic improvements in not only their DevOps workflows but also overall productivity. Given that, containers are here to stay for quite some time.

A study by Forrester found that 66 percent of organizations that adopted containers experienced accelerated developer efficiency. The report also revealed that 75 percent of companies achieved a moderate to significant increase in application deployment speed. So what exactly does this mean for the future of containerization?