What is good memory management in Ruby? Beyond the language’s built-in features (like its advanced garbage collection and heap paging mechanism), it also means writing sensible code, leveraging mature memory profiling tools and, of course, using the best monitoring tools and practices.

At Scout, we’re keenly aware of all of the above. Naturally, we’re especially conscious of that last point, and of just how often monitoring can be the deciding factor that saves the day.

That said, to be sure your monitoring is efficient and making the most impact, it’s essential to have an overall grasp of how memory works in Ruby.

So, in this three-part series, we’ll tackle all of the above! Here’s what you’ll get:

- Part 1: a primer on memory in Ruby

- Part 2: common memory issues and causes: memory fragmentation, memory leaks, and memory bloat

- Part 3: avoiding and solving memory issues, with a focus on effective Ruby memory monitoring as a complementary skill

Let’s kick things off by getting an overview of how memory functions in the world of Ruby, memory allocation at system and kernel level, and garbage collection.

🐕 Once you grasp how Ruby allocates memory, you’ll be better prepared to recognize when things go wrong. This basic knowledge will also help you make sense of what tools like Scout are showing you!

How Memory Works in Ruby

In Ruby, memory management relies primarily on the Ruby runtime, the host operating system, and the system kernel. The runtime’s garbage collector also plays an important role in determining how memory is managed and recycled.

Ruby organizes objects into segments called heap pages.

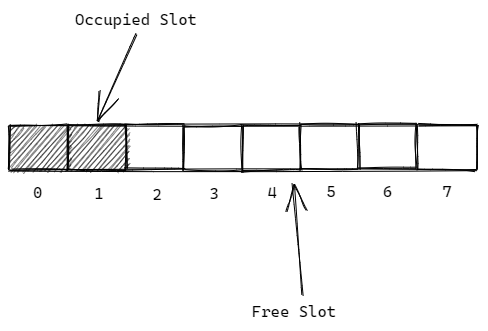

The heap space is divided into used heap pages and empty heap pages. These heap pages are split into equal-sized slots, allowing one object each, irrespective of data type.

Each slot is either free or occupied. When Ruby needs to allocate a new object, it looks for a free slot; if it can’t find one, it allocates a new heap page. Later, when an object is garbage collected, its slot is marked as free again and becomes available for future allocations.
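To see pages and slots for yourself, here’s a minimal sketch using Ruby’s built-in `GC.stat`. The key names below exist in current CRuby releases, but the actual numbers depend on your Ruby version and platform, so treat the output as illustrative only.

```ruby
# Snapshot the heap statistics before allocating anything new.
before = GC.stat

# Allocate 10,000 objects and keep references so they stay alive.
objects = Array.new(10_000) { Object.new }

after = GC.stat

puts "Heap pages allocated: #{after[:heap_allocated_pages]}"
puts "Live slots:           #{after[:heap_live_slots]}"
puts "Free slots:           #{after[:heap_free_slots]}"

# Every new object takes a slot, so the lifetime allocation counter grows.
allocated = after[:total_allocated_objects] - before[:total_allocated_objects]
puts "Objects allocated in between: #{allocated}"
```

Running this a few times with different object counts is a quick way to watch Ruby add heap pages once the free slots run out.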

Slots are quite small, only around 40 bytes each. Obviously, this won’t suit all kinds of data. To solve this, Ruby keeps any data that doesn’t fit in a separate location outside the heap page, and stores a pointer in the slot that can be used to locate the external data.
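One way to observe this overflow behavior is with `ObjectSpace.memsize_of` from the standard `objspace` library, which reports roughly how many bytes an object occupies, including externally stored data. This is only a sketch: the exact byte counts differ between Ruby versions, so the example prints them rather than assuming specific values.

```ruby
require "objspace"

short = "hi"           # small enough to live entirely inside its slot
long  = "x" * 10_000   # too big for a slot, so the bytes live elsewhere

short_size = ObjectSpace.memsize_of(short)
long_size  = ObjectSpace.memsize_of(long)

puts "short string: #{short_size} bytes"
puts "long string:  #{long_size} bytes"
```

The long string reports far more than a single slot’s worth of memory, because the figure includes the external buffer that its slot points to.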

All memory allocation in the Ruby runtime, including heap pages and external data managed by pointers, is handled by the system’s memory allocator. To get a better understanding of how Ruby manages memory under the hood, it’s a good idea to take a quick tour and examine how the underlying operating system plays a role in this process. Let’s do that next.

Memory allocation at the operating system and kernel level

Higher-level memory allocation calls are actually handled by the host operating system’s memory allocator. Even though this is generally abstracted away in Ruby, its effects can still surface as performance issues and memory problems that developers need to monitor, identify, and troubleshoot. Generally, this allocation involves a collection of C functions (in particular, malloc, calloc, realloc, and free), so let’s quickly run through each.

malloc stands for “memory allocation”. It takes the number of bytes to allocate as a parameter, finds a free block of at least that size, and returns a pointer to the start of the allocated memory. That said, it’s totally up to you to stay within the size you’ve been allocated, or else unexpected things are bound to happen.

calloc stands for “contiguous allocation”. It takes an element count and an element size, allocates one contiguous block big enough for all of the elements, and sets every byte in it to zero. This really comes in handy when working with arrays of elements with a known unit size. Like malloc, it returns a pointer to the start of the allocated memory.

realloc stands for “re-allocation”, and it’s used to resize previously allocated memory. It can grow or shrink the block, and it may move the data to a new location, so you should always use the pointer it returns. This lets you start with an estimate of the memory you need and adjust it dynamically as your program runs.

Finally, the aptly named free function releases previously allocated memory so that the allocator can reuse it. It takes a pointer to the start of the block that has to be freed.
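Ruby code rarely calls these functions directly, but the Fiddle library exposes thin wrappers around malloc, realloc, and free, which makes for a quick experiment. The following is a rough sketch, assuming Fiddle is available in your Ruby installation; the block sizes and the "hello" payload are arbitrary choices for illustration, and calloc is left out.

```ruby
require "fiddle"

# malloc: reserve 16 bytes. Fiddle.malloc returns the raw address as an Integer.
addr = Fiddle.malloc(16)

# Wrap the address so we can read and write it. No free function is attached,
# so releasing the memory stays our responsibility.
block = Fiddle::Pointer.new(addr, 16)
block[0, 5] = "hello"

# realloc: grow the block to 64 bytes. The data may move, so we replace the
# old address with the one that realloc returns.
addr = Fiddle.realloc(addr, 64)
grown = Fiddle::Pointer.new(addr, 64)
copied = grown[0, 5] # the original contents are preserved

puts "After realloc the block still starts with: #{copied}"

# free: hand the block back to the allocator. Using addr after this is a bug.
Fiddle.free(addr)
```

Notice how much bookkeeping is involved even in this tiny example: tracking sizes, swapping addresses after realloc, and remembering to call free exactly once. This is the work the Ruby runtime does on your behalf.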

At a lower level, the actual memory allocation is handled by the system kernel, which allocates memory in fixed-size chunks called pages (typically 4 KB). It’s important to note this because frequent allocations or inefficient memory usage can result in unnecessary system calls into the kernel, which are expensive in terms of performance.
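Because the kernel deals in whole pages, a request is effectively rounded up to the next page boundary. Here’s a small worked example of that arithmetic, assuming a 4 KB page size; `pages_for` is a hypothetical helper written for this illustration, not part of Ruby.

```ruby
# Number of whole pages needed to cover a request of `bytes` bytes.
# The 4096-byte default is an assumption; real page sizes vary by platform.
def pages_for(bytes, page_size: 4096)
  (bytes + page_size - 1) / page_size # integer division, rounded up
end

[1, 4096, 4097, 10_000].each do |bytes|
  pages = pages_for(bytes)
  puts "#{bytes} bytes -> #{pages} page(s), #{pages * 4096} bytes reserved"
end
```

Even a single extra byte past a page boundary costs a whole additional page, which is one reason allocators prefer to request memory in bulk and hand it out in smaller pieces.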

Let’s also note that, to minimize these calls, memory allocators typically request more memory than immediately needed, holding on to the extra space for future allocations. Being aware of this mechanism will come in handy later when telling actual memory issues apart from expected behavior.

Scout helps track these patterns by providing memory allocation breakdowns and timeline views that make it easier to spot when these low-level memory operations (which are normally abstracted away) might be causing problems. For more info, check this section of our docs.

Ruby and garbage collection

The garbage collection scheme that a runtime uses greatly affects how well available memory is utilized by the environment. Luckily, Ruby’s garbage collection system is quite advanced. It utilizes all of the aforementioned calls to ensure that the application has access to the appropriate amount of memory to carry out its activities properly – and the system has continued to improve over time.

As an example, at one point in the past, Ruby actually stopped the entire application to clean up memory! Yes, you read that right: the application was halted while garbage collection ran. This ensured that no new objects were allocated while garbage was being collected, which also meant garbage collection had to be super fast for top-quality application performance. Nowadays, Ruby still pauses briefly for garbage collection, but thanks to generational and incremental collection (introduced in Ruby 2.1 and 2.2, respectively), the work is split into smaller steps and the application is no longer halted for one long collection the way it used to be. These optimizations make garbage collection much less disruptive for modern Ruby applications.
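If you’re curious how often the garbage collector runs in your own process, `GC.stat` also reports run counts. Here’s a minimal sketch, assuming a recent CRuby; the amount of throwaway garbage is arbitrary, and the number of automatic runs you see will vary from machine to machine.

```ruby
before = GC.stat

# Create plenty of short-lived garbage that nothing holds on to.
100_000.times { |i| "temporary string #{i}" }

# Explicitly request a full (major) collection.
GC.start

after = GC.stat

puts "Total GC runs: #{after[:count] - before[:count]}"
puts "Minor GC runs: #{after[:minor_gc_count] - before[:minor_gc_count]}"
puts "Major GC runs: #{after[:major_gc_count] - before[:major_gc_count]}"
```

Minor runs only look at recently created objects, while major runs examine the whole heap, so a healthy application usually shows many more minor runs than major ones.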

We have a lot more ground to cover, but if you’re interested in a deep dive, this series from Jemma Issroff is a great resource to check out.

Making good monitoring memories

Let’s put this in the context of our ultimate goal: more effective monitoring for our Ruby applications. In short, understanding the underlying memory mechanisms helps you interpret what you’re seeing in your dashboards.

Now that we’ve gone through the basics of memory, what about where things go wrong? This question will be top of mind throughout the next part of this series, where we’ll tackle common memory issues and their causes, and it will come to the forefront in the final part as we discuss monitoring, identifying, and preventing these memory issues.

And one last note from us: while understanding Ruby’s memory system is a great way to gain mastery of the language, actually dealing with memory manually is challenging. Scout helps monitor memory usage in real time, offering practical insights without the need to track everything yourself. So, check out your options for Scout (including our free plan) and our guide to getting up and running in 3 minutes in Ruby, and be sure to join our Discord community!