A Comprehensive Guide to migrating from Python 2(Legacy) to Python 3

Overview

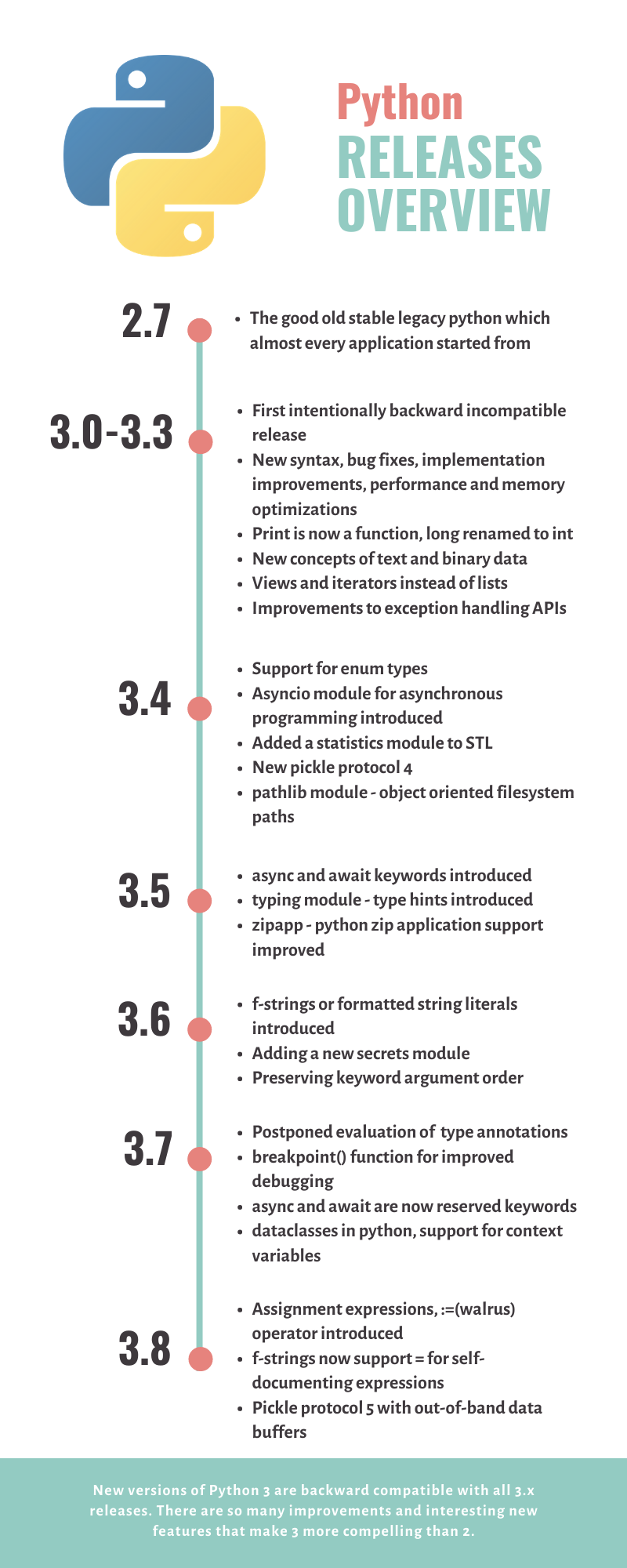

Python powers many applications we use day-to-day like Reddit, Instagram, Dropbox, Spotify and so on. Adoption of Python 3 has been a subject of debate in the Python community for long. While Python 3 has been out for more than a decade now, but there wasn’t much incentive to migrate from the stable Python 2.7 in the earlier releases.

If you’re still running on legacy python, it’s high time to migrate as it reaches end of it’s life from Jan 2020. If that is not enough motivation or you have too much in place for Python 2.7 in your code, read on.

In this article, we’ll discuss —

- Why you should care about the migration to Python 3?

- New and lucrative features of Python 3 which can take performance and developer productivity significantly up

- Concrete steps and strategies you could follow if you were to migrate a huge code-base running on Python 2

- Automated tools to help migrate — 2to3, Python-Future and Modernize with examples

- Some learning and gotchas involved in the process through the migration stories of Instagram, Dropbox and Facebook which are running Python 3 on the scale of a billion users.

Why invest in upgrading?

“I think Python 3 is actually is a better programming language than Python 2 was. I think that it resolves a lot of inconsistencies” — Glyph Lefkowitz, founder of Twisted — a popular networking engine written in Python

Python 2.7 was an LTS(long term support) release of Python so most of the users didn’t have to worry about porting their code every 18 months which could be a huge investment. Moreover, the migration process isn’t very straightforward, specially for the bigger code-bases where no single person has context of all the parts of software.

It cannot be fully automated through tools or packages, one has to manually intervene at some places. This is where having good understanding and unit test coverage helps, so that you can identify the input and expected output from a function and refactor it with assurance.

That being said, here are two important reasons to why an investment in migration could be productive for you —

Developer Productivity

One of the big reasons of Python’s popularity is that it is easy to learn and write code in. It is dynamically typed and doesn’t enforce strict type checking. This can become complicated as your code scales. Let’s check out an example.

def get_category_id_from_cities(name, cities_map):

"""

Input:

name - (str)

cities_map - (dict)

Returns:

category_id - (int)

"""This is a simple function that takes in two arguments, does some operation and returns an output. There’s a nice doc string added by developer which tells you the type of parameters it is expecting and the return type.

However, this piece of function is hard to scale. Without a standard type system in place, it is easy introduce new inputs arguments, modify the types and comment can easily go out of sync with the implementation overtime.

Most of the teams facing this issue use a popular python package mypy for optional type checking. Python 3.5 introduced type annotations(PEP 484) as a standard system for the same.

The above piece of function would look like this with type hints —

from typing import Dict

def get_category_id_from_cities(name: str, cities_map: dict[str, int]) -> int:

// Do somethingThese type hints are completely optional i.e. They do not enforce static type checking and are completely ignored at runtime. Including them into your code makes it self-documenting, easy to understand and modify. This in-turn increases developer velocity. We’ll discuss type annotations in detail in the next section.

Performance Improvements

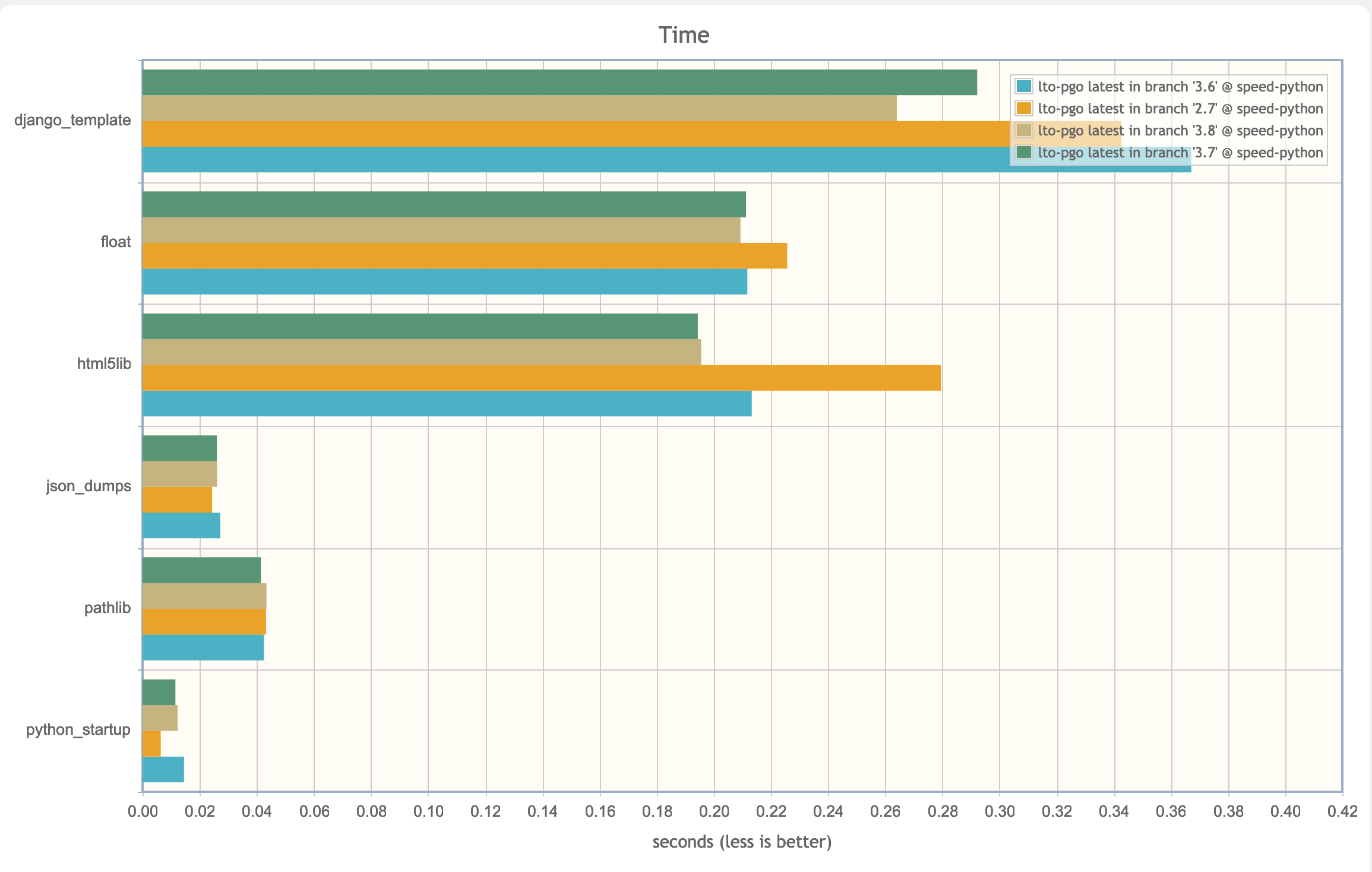

Here’s a screenshot from speed.python.org which is an official performance benchmarking tool for Python. This graph lists some common application operations on different Python versions.

You’ll realize that Python 3.7 and 3.8 are fastest yet for almost all operations except for startup time than Python 2.7. For most applications, a few millisecond difference of startup time wouldn’t matter.

Python 3 has improved on many CPython implementations over the time, moved and re-implementated standard library capabilities in C giving significant performance boost to common utilities.

More than these common improvements, Python 3 introduced asyncio module in 3.4 release which enables asynchronous programming. What it simply means is that while your current request is waiting on an I/O operation, you can use that time to serve another request.

With all this in mind, the debate of Python 2 vs 3 can be solved by simply saying after the migration, your code will use half the memory and run twice as fast. According to Instagram’s Pycon 2017 Keynote, after moving to Python 3, they reported a 12% CPU and 30% memory utilization win on their uwsgi/Django and Celery(async) tier respectively.

Python community is moving continuously towards improving 3, there’s no good reason to stay on 2 now, the delay in migration is the delay on missing out huge performance improvements and awesome new features. One of the most popular frameworks — Django, has dropped the support of Python 2 entirely(Django 2.0) and here’s a full list of projects who have pledged for dropping support of Python 2 from 2020.

What’s new in Python 3?

This section will detail some of the new features introduced in Python 3(aggregated till 3.8) with examples. Most of the syntax and type changes can be taken care of by using automated tools like 2 to 3, modernize etc. discussed in the next section. However, there are some gotchas, specially when handling strings which might require you to understand the code before making the compatible change.

New Syntax Features

1. Print statement — print is now a function, not a statement.

2. Walrus(:=) Operator assigns values to variables as part of a larger expression. Let’s look at this random piece of code in Python 2 and how it’s readability could be improved using assignment expressions in Python 3.

3. Matrix Multiplication Operator(@) was introduced in 3.5 as Python advances towards making language better for scientific computations. Stating from Python 3.5 release documentation — currently, no builtin Python types implement the new operator. It can be implemented by defining __matmul__(), __rmatmul__(), and __imatmul__() for regular, reflected, and in-place matrix multiplication.

You could also use Numpy > 1.10 which supports the new @ operator. Let’s checkout an example implementation of the same.

class Matrix(object):

def __init__(self, matrix_values):

self.matrix_values = matrix_values

def __matmul__(self, m2):

"""

https://docs.python.org/3/reference/datamodel.html#object.__matmul__

"""

return Matrix(Matrix._multiply(self.matrix_values, m2.matrix_values))

def __rmatmul__(self, m1):

"""

https://docs.python.org/3/reference/datamodel.html#object.__rmatmul__

"""

return Matrix(Matrix._multiply(m1.matrix_values, self.matrix_values))

def __imatmul__(self, m2):

"""

https://docs.python.org/3/reference/datamodel.html#object.__imatmul__

"""

return self.__matmul__(m2)

@staticmethod

def _multiply(m1, m2):

return [[sum(m1 * m2

for m1, m2 in zip(m1_row, m2_col))

for m2_col in zip(*m2)]

for m1_row in m1]

if __name__ == '__main__':

m1 = Matrix([[12, 34], [4, 2]])

m2 = Matrix([[2, 24], [12, 4]])

m1 @ m2 // Invokes the __matmul__ method

m1 @= m2 // Invokes the __imatmul__ method4. breakpoint() function was introduced in 3.7. It is just an easy way to enter Python debugger(pdb). It calls sys.breakpointhook() which in-turn imports pdb and calls pdb.set_trace().

You could also set an environment variable PYTHONBREAKPOINT to enter the debugger of your choice.

Changes to Core Types

Understanding the new Strings

One of the major incompatible changes is the way strings are now handled in Python 3 and you’ll probably spend most time fixing strings in your Python 2 code.

Let’s first understand the two uses or types of strings —

Text or normal strings — the human readable form used to display a sequence of characters on a webpage or application. It can have any kind of characters, currency symbols, emojis, alphabets from different languages and so on.

Data or Bytes Data — The binary encoding of normal strings which is suitable to be processed by machines. It is a sequence of bits which is used while writing data to the disk, or transmitting it over a network and so on.

Unicode

Let’s talk a bit about Unicode. Unicode is an attempt to map every character or symbol known to humans to a codepoint. It is not an encoding. A codepoint is simply an hexa-decimal representation of the character which can then be encoded to binary using any encoding scheme like UTF-8, UTF-16 etc.

Now in Python 2, a single type str was used to represent the text as well as binary data. Default encoding was 7-bit ASCII. If you needed to support Unicode characters, there was a separate type for it called unicode. Python 2 allowed mixing of these two different types by implicitly casting the strings, but it’d cause problems at runtime.

In Python 3, str and bytes types are explicitly different and the mixing of these is not allowed. unicode type in Python 2 corresponds to str type in Python 3. All the string literals in Python 3 are now Unicode, be it single, double or triple quote docstrings. Also, UTF-8 is default encoding in Python 3.

Here are some simple examples of string concatenation differences in Python 2.7 and 3.7. Notice that we could use encode() and decode() functions to handle the type conversion specifically.

Let’s see one more example to how Python 2 handles non ASCII characters and how to avoid a common pitfall of string manipulation.

In the above example, when we try to format a non-ASCII character(£) in second step, Python 2 garbles it to hexadecimal value, while Python 3 can handle it perfectly fine.

When you try to convert it to a unicode string on the next step for fixing, you see the dreaded UnicodeDecodeError. You’d be hitting it very often if your application is playing with strings. The simple solution is to use literal u in front of every such string.

File I/O

In Python 2, file opened using open() is being read as general str type. While in Python 3, you have to specify the mode to open the file. The default is the text type. It is a common pitfall as now you cannot treat the files which are not encoded using UTF-8(PNG, JPG etc.) and expect them to be read as Unicode text.

Changes to the Dictionary type

There have been three major changes to dictionaries in Python 3. Let’s explore them one by one.

Views/Iterators instead of lists

The support of functions iteritems(), iterkeys() and itervalues() has been removed and now items(), keys() and values() return views instead of lists.

Checking existence of a key in dict

dict.has_key() is no more. It has been removed in support of in operator. Whether a key exists in a dict or not can be simply checked by key in my_dict.

Ordering of keys in a dict

In Python 2, order of elements in a dictionary remained same with every execution. This exposed a security vulnerability in Python 2(CVE-2012-1150) for DOS(Denial of Service) attacks as you could predict the order of elements.

Your code could be relying on this behavior of Python 2. Python 2.6.8 introduced an environment variable $PYTHONHASHSEED which when set to random, overcomes this security vulnerability by randomizing the hash function. This behavior is on by default beyond Python 3.3.

In Python 3.6, dictionaries were re-implemented to consume 20–25% less memory than Python 3.5 and suggested that keys will be preserved by their insertion order. However, this behavior can only be relied upon beyond Python 3.7.

Changes to the Numeric Types

long and int type

long type in Python 2 has been renamed to int in Python 3. int will handle the large values automatically. In Python 2, if the int overflowed because of some operation, it was implicitly converted to long type.

If your application contains the code which relies on distinction of int and long from Python 2, it’ll need to be fixed. Automatic conversions won’t be able to take care of this logic.

Division / Operator

In Python 2, / operator returned the floor value while dividing two integers and a float if any of the operands is float. Python 3 returns float value in both the cases.

There are no changes to the behavior of floor // division operator. So, you could change your Python 2 code to use // where floor division is specifically required.

New Modules and STL Re-organization

This section will cover some new and interesting things introduced in Python 3.

f-strings(PEP 498)

f-strings are a lot cleaner and easier way to format strings than the traditional %formatting or the str.format() function. Stating from the documentation of PEP-498 —

F-strings provide a way to embed expressions inside string literals, using a minimal syntax. It should be noted that an f-string is really an expression evaluated at run time, not a constant value. In Python source code, an f-string is a literal string, prefixed with ‘f’, which contains expressions inside braces. The expressions are replaced with their values.

Also, f-strings are faster than both the traditional formatting approaches. Since it’s a way to embed all kind of python expressions, you can actually make function calls within the string. Let’s see f-strings in action with some examples —

Python 3.7

>>> # Expression evaluations

>>> name = "Google"

>>> f"You could {name} this."

'You could Google this.'

>>> f"{5 * 5}"

'25'

>>> # function call within string

>>> name = "GOOGLE"

>>> f"You could {name.lower()}"

'You could google'

>>> # Multiline formatting example

>>> name_1 = "Google"

>>> name_2 = "Microsoft"

>>> name_3 = "Facebook"

>>>

>>> (f"Here's some top "

... f"tech companies of "

... f"the world - {name_1}, "

... f"{name_2} and {name_3}.") # Note that there's an 'f' in front of every line

"Here's some top tech companies of the world - Google, Microsoft and Facebook."f-strings also allow specifying the conversion type with !r, !s and !a which call the repr(), str() and ascii() on the expression. Here’s a simple example of the same —

class Avengers(object):

def __init__(self, superhero_name, actual_name):

self.superhero_name = superhero_name

self.actual_name = actual_name

def __repr__(self):

return f"{self.actual_name} is the {self.superhero_name}"

>>> ironman = Avengers("Ironman", "Tony Stark")

>>> f"ironman!r"

>>> 'Tony Stark is the ironman'Type Hints(Type Annotations)

Type Hints were introduced with typingmodule in PEP 484. We’ve already talked about what type hints are and their benefits in long run. They make refactors much easier by preventing common bugs, improve readability of code and promote intellisense(Intelligent code completion).

Type Annotations are invaluable additions which requires somewhat extra development efforts in the beginning, just like documentation and writing tests, but are justified in the long run.

Let’s take a look at some basic type hinting examples from this gist. There are comments to explain the different cases.

# 1. The hello world of type hinting

def greeting(name: str) -> str:

return f"Hello {name}"

# 2. Type hinting a complex type

from typing import Dict, List

class Node:

pass

def get_node(Dict(str, List[Node]):

# Do something

# 3. Type Aliases

from typing import Tuple

Node = Tuple[int, str]

def pop_node(node: Node) -> Node:

pass

# 4. Type hinting Callables can be done by using Callable[[arg_1, arg_2, arg_X], ReturnType]

from typing import Callable

def async_query(on_success: Callable[[int, str], str],

on_failure: Callable[[int, str], None]):

pass

# 5. You could use Any as a special type assignable to and from any type.

# Similarly None can be used for specifying type(None)

def delete_elements(list: List[Any]) -> None:

passSome important things to note about type hints —

- There’s no type checking at runtime and type hints are not mandatory. Python still remains a dynamically typed language. You could still pass an

intto a function expectingstrand shoot yourself in the foot. Then what’s the use? - You could use mypy or implement runtime type checking functionality in your code by using decorators or metaclasses. The

typingmodule providesget_type_hints()function for the same. This is specially helpful for IDEs(Pycharm, VS Code) which can improve their intellisense based on type hints. - If you change the implementation of the function without changing types in it’s comments, nothing happens. But, with type checkers or linters in place, if you don’t change the type hints, they will probably yell at you.

Asyncio(Asynchronous I/O)

Introduced in PEP 3156, asyncio is a way to write concurrent code using the async/await syntax. Let’s talk a bit about why it is needed.

Network I/O takes time. If your application is doing a lot of it, you’d probably have much better results utilizing the time it takes to receive a response from server or microservice to cater other pending requests. Even if you think that your network is fast, for the code, it is a super slow process.

Take a look at this screenshot from a popular Github gist comparing the latency numbers of different operations that every programmer should know.

Now to compare these on a humanized scale, these durations are multiplied by a billion. For accessing data from CPU memory on this scale, it takes a heartbeat, for reading something from SSD, it takes 2 days and to send a datapacket within same datacenter over the network, it takes around six days.

Now imagine what all a human could do for those 6 days without waiting on the response. A network call is of the same magnitude for a piece of code.

This is where we need asynchronous I/O. It doesn’t mean making a single request run faster, but to enable a server to serve thousands of requests at a time as swiftly as possible.

Quoting from the documentation —

asyncio provides a set of high-level APIs to

- run Python coroutines concurrently and have full control over their execution

- perform network IO and IPC

- control subprocesses

- distribute tasks via queues

- synchronize concurrent code

Asynchronous programming is a big concept and out of scope for this article to explain in detail. Let’s look into one interesting example in which we’ll analyse the performance of popular requests library vs aiohttp with asyncio.

Here’s the gist which makes requests to a dummy API server’s different endpoints, once sequentially using requests and then using aiohttp in parallel. Let’s look at the results —

On an average, for multiple runs, aiohttp with asyncio is 5–7x faster than making sequential requests.

That being said, if you take a look at the code of making asynchronous calls, it is much more complex than using requests. This is a tradeoff you need to keep in mind, using asyncio only when needed because it increases code complexity and debugging could be an another challenge in async code.

Data Classes

In simple words, dataclasses simplify the boilerplate overhead when you create data classes aka models to represent the states and do operations on your data.

They implement the regular ‘dunder’ methods like __init__, __repr__, __hash__, __eq__ and so on automatically. Let’s take an example to see dataclasses introduced in Python 3.7(PEP-557) in action —

# Regular Data Class definition

class Order(object):

order_id: int

order_state: str

total_cost: float

def __init__(self, order_id, order_state, total_cost):

self.order_id = order_id

self.order_state = order_state

self.total_cost = total_cost

def __repr__(self) -> str:

return (f"Order Information for {self.order_id} -"

f"Order State = {self.order_state}, Total Cost = {self.total_cost}")

def __eq__(self, other) -> bool:

if not isinstance(other, Order):

return NotImplemented

return (

(self.order_id, self.order_state, self.total_cost) ==

(other.order_id, other.order_state, other.total_cost))

def __hash__(self) -> int:

return hash((self.order_id, self.order_state, self.total_cost))

# ------------------------------------------------------------------------ #

# With @dataclass decorator introduced in Python 3.7 all of this reduces to

from dataclasses import dataclass

@dataclass(unsafe_hash=True)

class Order(object):

order_id: int

order_state: str

total_cost: float

# Dataclass decorator will automatically generate all the dunder methods

# It can also generate comparision methods and handle immutability

Exception Handling API Updates

There are several major updates to the way exceptions are now handled in Python 3. New powerful features and a lot cleaner way for raising and catching exceptions have been added. Here are a few important ones —

- Python 3 enforces that all exceptions be derived from

BaseExceptionwhich is the root of Exception hierarchy. Although this practice isn’t new, it was never enforced. - All the exceptions to be used with

exceptshould inherit fromExceptionclass.BaseExceptionto be used as base class only for exceptions that should be handled at top-level likeSystemExitorKeyboardInterrupt - You must now use

raise Exception(args)rather thanraise Exception, arg— PEP-3109 StandardErrorno longer exists.- Exceptions cannot be iterated anymore. They do not behave like sequences.

argskeyword is to be used to access the arguments. - Python 2 allowed catching exceptions with the syntax like

except TypeError, errororexcept TypeError as error. Python 3 has dropped support for the former(PEP-3110). This was done because of a common mistake developers did to catch multiple exceptions like this —

try:

# do something

except TypeError, ValueError:

pass

- This piece of code wouldn’t ever catch

ValueError, it’ll catchTypeErrorand assign the object to “ValueError” variable. The correct way would be to use tuple likeexcept (TypeError, ValueError). - When you assign exception to a variable with

except TypeError as e, the scope of variable ends atexceptblock. From the documentation —

This is as if —

except E as N:

foo

was translated to

except E as N:

try:

foo

finally:

del N

- This means the exception must be assigned to a different name to be able to refer to it after the except clause.

- Exception objects now store the exception traceback in

__traceback__attribute(PEP-3134). All the information pertaining to an exception is now present in the object. PEP-3134 also had a major overhaul to Exception Chaining. A detailed discussion on that will go beyond the context of this article so it is not covered here.

Pickling Protocols

pickle is a popular Python module known for serialization and deserialization of complex python object structures. Serialization is the process of transforming objects into a byte stream suitable to store on a disk or to be transmitted over a network. De-serialization is the opposite of same.

If you’re using pickle to serialize and deserialize your data in Python 2, you’d want to keep certain protocol changes in mind. Here’s a graphic listing the changes and compatibility guidelines —

Pathlib Module

Python 3.4(PEP-428) introduced the new pathlib module in STL with the simple idea of handling filesystem paths(usually done using os.path) and common operations done on those paths in an object oriented way.

Stating from the release documentation —

The new

pathlibmodule offers classes representing filesystem paths with semantics appropriate for different operating systems. Path classes are divided between pure paths, which provide purely computational operations without I/O, and concrete paths, which inherit from pure paths but also provide I/O operations.

Let’s take a look at a simple example using this new API —

Enum Type(PEP-0435)

Python 3.4 release added an enum type to standard library. It has also been backported upto Python 2.4 so chances are you might already be familiar and using it in the code. Normally, you’d create a custom class to define enumerations like this —

class Color:

RED = "1"

GREEN = "2"

BLUE = "3"

With enum it’ll look like —

from enum import Enumclass Color(Enum):

RED = "1"

GREEN = "2"

BLUE = "3"

The difference between the two approaches being that enum metaclass provides methods like __contains__(), __dir__(), __iter__() etc. which aren’t there in a custom class.

Statistics(PEP-0450)

Python is also popular in science and statistics fields. To acknowledge the importance, Python 3.4 introduced a new statistics module in the standard library to make scientific use-cases easier.

As the module documentation suggests, it is not intended to compete with libraries like NumPy, SciPy or other professional proprietary software like Matlab. It is aimed at the level of graphing and scientific calculators. It provides simple functions for calculation of the mean, median, mode, variance and standard deviation of a data series.

Secrets(PEP-0506)

Googling for “python how to generate a password” returns stackoverflow answers and tutorials suggesting the use of random module whose documentation warns to not use its pseudo-random generators for security purposes. Developers may not go through the documentation or may not be aware of the security implications of using random to generate passwords and auth tokens.

This attractiveness of random module became the rationale of the new secrets module added in Python 3.6. It provides the functionality to generate secured random numbers for managing anything that should be a secret. Let’s look at couple of simple examples to generate passwords and auth-tokens—

Standard Library Reorganization

Automated Tools

This section details and compares popular automatic tools used for Python 2 to 3 conversion. They can save a lot of your time. You’d not want to manually check every print statement or string literal in your code. The least these tools can do is to highlight what all places would need the chance in order to be compatible with Python 3.

2to3

2to3 is a python script that applies a series of fixers to change Python 2 code to valid Python 3 code directly. Let’s take a look at a small example taken from documentation —

#test.pydef greet(name):

print "Hello, {0}!".format(name)print "What's your name?"

name = raw_input()

greet(name)

Running 2to3 test.py gives out the diff against the original code. They can be persisted to file using the -w flag.

Each step of transforming code is encapsulated in a fixer. Each of them can be turned on/off individually using the -l flag. It is also possible to write your own fixers through lib2to3, the supporting library behind the tool.

The con with using 2to3 is that it is a one way street, you cannot make your code run both on Python 2 and 3 at the same time. It has to be running on 3, which might not be possible for huge code bases to shift to right away.

2*3 = Six

In the migration process, most of the codebases running Python 2 on production, would want their code to keep running. Python 3 support can be introduced, but you still need your code to work on Python 2 as well.

Six library provides simple utility to wrap the differences between Python 2 and 3. It is intended to support codebases running on both Python 2 and 3 without modification. Let’s take a look at some basic utilities provided by six. A full list is available on six’s official documentation.

# Booleans representing whether code is running Python 2 or 3

six.PY2

six.PY3# In Python 2, this is long and int, and in Python 3, just int

six.integer_types# Strings data, basestring() in Python 2 and str in Python 3

six.string_types# Coerce s to binary_type

six.ensure_binary(s, encoding='utf-8', errors='strict')# Coerce s to str

six.ensure_str(s, encoding='utf-8', errors='strict')# Six provides support for module renames and STL reorganization too# To import html_parser in Python 2(HTMLParser) and 3(html.parser)

from six.moves import html_parser# To import cPickle(Python 2) or pickle(Python 3)

from six.moves import cPickle

Modernize

python-modernize is a utility based on 2to3, but uses six as a runtime dependency to give out a common subset of code running both on Python 2 and Python 3. It works in the same way as 2to3 command line utility.

Python Future

python-future is a library which allows you to add the missing compatibility layer between Python 2 and 3.x with minimal overhead. You would have a clean codebase running on Python 3 as well as Python 2.

It provides future and past packages with back ports and forward ports of features from Python 3 and 2. It also comes with the command line utilities similar to 2to3 named futurize and pasteurize which let you automatically convert code from Python 2 or Python 3 module by module, to maintain a clean Python 3 style codebase supporting both 2 and 3.

python-future and futurize is similar to six and python-modernize but it is a higher level compatibility layer than six. It has the further goal of allowing standard Python 3 code to run with almost no modification on both 3 and 2. We’ll look at the differences towards the end.

Let’s check out some simple examples of making code compatible using python-future —

futurize works in two stages — It first applies the 2to3 fixtures to convert Python 2 code to 3 and then adds the __future__ and future module imports so that it works with 2 as well. It also handles the standard library reorganization(PEP-3108).

The Silver Bullet

These tools might seem like a silver bullet to migrate the whole codebase running on Python 2 to Python 3, but there is a catch — They may not always do the right thing. For example, using python-future for a line of code where you’re iterating over elements of a dict using dict.itervalues() will convert it to list(dict.itervalues()) which defeats the whole purpose of returning views instead of lists for performance improvements.

If your application is performance critical, you might want to handle this in other ways. This is just one simple example, there would definitely be more where tool does something that works which you might have wanted to handle differently. The best way of using these tools would be to always to look at what all things they’ll change to a module, discuss the changes with the dev team for performance, readability, complexity and then writing them back to the file as needed.

Strategies for Migration

We’ll discuss two major approaches to the process of migration. The right one depends on factors like size of the codebase, developer bandwidth and need of functioning application at all times.

Rewrite the modules in Python 3 from scratch

If your application is small and can be refactored quickly, just starting fresh and re-writing the code using Python 3 could be one viable approach. It’ll allow you to use all the new features provided by Python 3 right away and the will give you the opportunity to re-write the pieces you’ve been wanting to improve since long.

It also helps with the overhead of writing and managing code compatible with both 2 and 3 and then you cannot use the new Python 3 features till your application hasn’t migrated completely.

A balanced approach

If the application is big, works on a decent scale and you’re with a large team working on different parts of the code base, we need a functioning application at all times.

You’ll also continue to push new features alongside a set team of developers working on migrating the code from 2 to 3. You’ll need to write cross-compatible code, but automatic tools will do it for you.

- Also discuss the importance of having unit tests here

- If there are unit tests for the existing code, they’d be super helpful for the migration

- Checking test case coverage using coverage tool

- Using Tox tool to run tests on multiple python versions

If the application is big, works on a decent scale and you’re with a large team working on different parts of the code base, you’d need a functioning application at all times. Product engineers would also want to push new features alongside a set team of developers working on migrating the code from 2 to 3.

Here are some high-level steps for beginning with the migration process —

- Ensure that all the new code which is being written, is Python 2 and 3 compatible with proper unit test case coverage.

- Plan the changes well. Decide a module, package or microservice you’d want to start with.

- Take a look at the dependencies and packages you’d need to upgrade for Python 3 support. Most of the packages updated versions support Python 3 or there would most likely a Python 3 alternative to those which have been abandoned.

- Use one of the automated tools or linters to identify the changes you’d need to make to your code. Discuss those with the team for the need, performance and complexity.

- Once you do a change compatible with both Python 2 and 3, make sure to fix the unit test cases to work with both Python 2 and 3. Use

toxto run your tests in the two separate Python environments.toxis discussed in the following section on importance of having tests during migrations. - Make sure the code going into the master branch passes the test cases written for both Python 2 and 3. It’d be helpful to add a new step of running all test cases in Python 3 in CI or release pipeline.

Usually, in most of the organizations, releases are done under some sort of feature flagging or percentage rollout to users on production instead of immediately rolling out to 100% of the traffic. Once the migration of a module is complete, it could be first released to the developers or users within the organization, then to beta and then to the rest of the users.

This helps as most of the bugs will be identified during initial development and beta phases itself. After the rollout is 100% done and is working fine, compatibility code can be deleted and you’d be able to use the new features of Python 3. This approach was taken by Instagram to migrate their huge codebase to Python 3 with zero downtime.

Importance of testing in migrations

Tests are an important part of software development life cycle. If your application has a good unit test case coverage, you can confidently refactor your code and not worry of repercussions.

In the migration from Python 2 to 3, an important first step would be to check the percentage of code covered using some tool(Ex — coverage.py) by unit tests and maximizing the same by writing more test cases. This investment speeds up the development cycle in long run. It’ll enable you to handle a change as small as a string update to as major as Python 3 to 4(which could be coming 😛)

After a decent test coverage, you could utilize tox — a package which lets you run tests on different python versions in isolated virtual environments. It can also be integrated with popular CI pipelines like Jenkins, CircleCI and Travis CI. You could also parallelize the whole test suite runs on different python versions using tox if it affects the speed of release process.

Conclusion

In this article we discussed the why and how of migrating from Python 2 to 3. We looked at what all good things Python 3 has to offer, their usage and advantages. We explored some automated tooling to help with migration process, two different strategies to approach the migration and their pros and cons.

Migration from 2 to 3 is a challenging but rewarding process. The result is a better codebase, improved developer productivity and performance. It would give you a solid foundation to scale for the next few years, at least. You’d also be able to contribute back to open-source Python community which is making sure it keeps getting better and better over time.